Max Niederhofer recently wrote a very thoughtful piece on the psychology of venture capital, “Fear and loathing in Venture Capital”. One of the core points he discusses is how difficult it is for VCs to learn, because the feedback cycles on our investment decisions are painfully slow.

According to VentureBeat, the median time for an early-stage venture investor to get an exit is 8.2 years. Note that this is a median; many of the big exits take even longer. Over an 8–10-year period, an enormous number of external factors can also affect a company, its market and its team, any of which could interfere with you getting useful feedback. Furthermore, investors then base their learnings on a relatively tiny number of portfolio companies, a fact that would appal any statistician.

In this analysis, I’m hoping to propose a method for venture investors to shorten their learning cycles and base those faster learnings on far more data points.

At Frontline, we are exposed to roughly 1,500 companies every year, and we record a lot of data on all of them. Since Frontline’s inception, we have tracked each company’s industry, location and life stage, how we saw it, who on our team took the lead, how far it got in our pipeline, why we decided to invest or not to invest, our opinion of its founders and, since 2016, the perceived gender and ethnicity of the founders.

Tomasz Tunguz at Redpoint Ventures consistently discusses using data for better decision-making in companies, and I felt this dataset could be a great way to bring more evidence into our venture decisions. So rather than basing my VC learnings on my 15 portfolio investments over a 10–12-year period, as almost all VCs do, I could also learn, on an ongoing basis, from the hundreds or thousands of companies I have not invested in.

Over the past six years, I have personally seen 2,684 companies that are B2B, seed-stage and based in Europe. Of those, I have met in person or had a call with almost 600. Unlike the larger set, these 600 are companies where I had at least spent time speaking with the founders and understanding the business, so I felt they were a better dataset for this analysis.

The Analysis

I paired the dataset of c. 600 companies, and why I said no to each of them, with publicly available data on the total amount each company has raised since we passed, and divided that total by the number of months since then. This allowed me to compare the companies roughly on a level playing field, regardless of when I saw them. For example:

- Company A I saw 12 months ago raised £1.2m since (so £100k per month)

- Company B I saw 48 months ago raised £1.2m since (so £25k per month)

(I know capital raised does not necessarily correlate with success, but across a dataset of 600 companies I felt it was a good enough indicator of success to start basing learnings on, and an easy way to compare the companies with each other.)
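The normalisation itself is simple arithmetic: capital raised since we passed, divided by the months elapsed. A minimal Python sketch using the two illustrative companies above; the `PassedCompany` class, its field names and the `raised_per_month` method are hypothetical, not the schema of our dealflow system.

```python
from dataclasses import dataclass


@dataclass
class PassedCompany:
    # Hypothetical record shape, for illustration only
    name: str
    months_since_pass: int    # months between our "no" and today
    raised_since_pass: float  # publicly reported capital raised since then (GBP)

    def raised_per_month(self) -> float:
        """Capital raised since we passed, spread evenly over the months elapsed."""
        if self.months_since_pass <= 0:
            raise ValueError("need at least one month since passing")
        return self.raised_since_pass / self.months_since_pass


companies = [
    PassedCompany("Company A", months_since_pass=12, raised_since_pass=1_200_000),
    PassedCompany("Company B", months_since_pass=48, raised_since_pass=1_200_000),
]

for c in companies:
    print(f"{c.name}: £{c.raised_per_month():,.0f} per month")
```

Both companies raised the same total, but dividing by elapsed time stops older rejections from looking better simply because they have had longer to raise.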

I then grouped all the companies by the reason I had recorded in our system for passing on them:

- Weak Founding Team

- Small Market

- Competitive Market

- Product / Technology

- Business Model

- Price (i.e. valuation was too high)

- Speed (i.e. deal closed too fast for us)

(One important point: our dealflow system makes us record these reasons at the time we make the decision, so hindsight bias is not a factor here. In fact, on many occasions when reviewing the data, the reason I thought I remembered for rejecting a company was not what I had written at the time.)

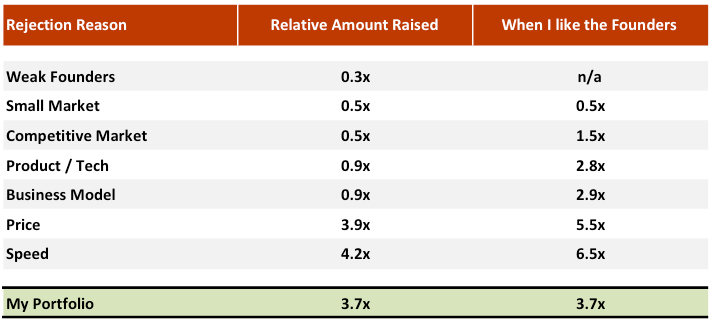

I re-based (or normalised) all the groupings to the average of all the companies in the analysis. The average raised per company per month across the entire dataset was $89k, while the average across all companies in the ‘Weak Founding Team’ category was $27k. So if the overall average ($89k) is set to 1, the average company in the weak team category has raised 0.3x (a little less than a third) of what the average company in the whole dataset has raised.
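A minimal sketch of the re-basing step, assuming each company’s monthly figure has already been computed and grouped by its recorded rejection reason. The function names and the toy figures are hypothetical; only the $89k and $27k averages come from the analysis.

```python
from statistics import mean


def rebase(category_mean: float, overall_mean: float) -> float:
    """Express a category average as a multiple of the overall average (overall = 1.0)."""
    return category_mean / overall_mean


def rebased_indices(rates_by_reason: dict[str, list[float]]) -> dict[str, float]:
    """Index every rejection reason against the pooled mean of all companies.

    The overall mean is taken per company across the whole dataset, so larger
    categories carry more weight in it than smaller ones.
    """
    all_rates = [rate for rates in rates_by_reason.values() for rate in rates]
    overall = mean(all_rates)
    return {reason: rebase(mean(rates), overall)
            for reason, rates in rates_by_reason.items() if rates}


# The two averages quoted above, in $k raised per company per month
print(round(rebase(27, 89), 2))  # 0.3

# Hypothetical toy data, only to show the shape of the calculation
toy = {"Weak Founding Team": [10, 20], "Price": [100, 150]}
print(rebased_indices(toy))
```

Pooling all companies before taking the mean matches the “average raised per company per month, across the entire dataset” described in the text, rather than an average of the category averages.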

When rejecting companies, I also made a note of the teams that were particularly strong. The right-hand side of the chart compares, for each category, the capital raised since by the companies whose team I liked a lot.

Finally, I included the results of my portfolio as a point of comparison.

The Results

Weak Founding Team

It will not be news to most VCs reading this that the companies I considered to have the weakest teams raised the least. As the chart shows, companies rejected for a weak founding team have raised only 30% of the average and a twelfth of what my portfolio has raised. I’ve heard many seasoned VCs at conferences talk about the importance of team, but I’ve yet to see any data-based analysis showing that it makes a difference.

On the right-hand side of the chart, you can see each rejection reason restricted to the companies whose founders I had marked in our system as ones I liked a lot. On average, these raised 2–3x the average of their respective groupings.
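The strong-team comparison is the same averaging, applied to a subset within each category. A minimal sketch, assuming each record carries the rejection reason, the monthly figure and a boolean flag; `strong_team_uplift` and the `liked_team` flag are hypothetical names standing in for the note in our system, and the rows are toy numbers, not Frontline data.

```python
from statistics import mean


def strong_team_uplift(rows):
    """Per rejection reason: mean monthly raise of the companies whose founders we
    marked as strong, divided by the mean monthly raise of the whole category.

    `rows` is an iterable of (reason, raised_per_month, liked_team) tuples;
    `liked_team` is a hypothetical boolean standing in for the note in our system.
    """
    by_reason = {}
    for reason, rate, liked_team in rows:
        by_reason.setdefault(reason, []).append((rate, liked_team))

    uplift = {}
    for reason, entries in by_reason.items():
        strong = [rate for rate, liked in entries if liked]
        category_mean = mean([rate for rate, _ in entries])
        if not strong or category_mean == 0:
            continue  # nothing meaningful to compare for this reason
        uplift[reason] = mean(strong) / category_mean
    return uplift


# Hypothetical toy rows, not Frontline data
rows = [
    ("Competitive Market", 20, False),
    ("Competitive Market", 30, False),
    ("Competitive Market", 100, True),
    ("Small Market", 10, False),
]
print(strong_team_uplift(rows))  # {'Competitive Market': 2.0}
```

Categories with no strong-team companies are skipped rather than reported as zero, since a missing subset says nothing about how strong teams perform there.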

Consensus has it that strong teams are critical in determining company success, yet I was never fully comfortable that I was correctly choosing between strong and weak founding teams. Having now completed this analysis, I am confident that I can trust my practices here.

Small Market and Competitive Market

Another generally accepted belief in our industry is ‘don’t invest in small or competitive markets’. Again, my analysis backs this up: the ‘Small Market’ and ‘Competitive Market’ companies raised less than half the average and an eighth of what my portfolio has raised.

Interestingly, small and competitive markets differ quite a lot when you look at the companies with great teams in each category. Small-market companies with great teams still go on to raise very little, while competitive-market companies with a great team can go on to raise 1.6x the average, half of what my portfolio has raised.

My two main learnings here are:

a) We should not invest in small markets, even with a great team; and

b) We should avoid investing in companies in highly competitive markets, unless we think the team is incredible.

Product/Tech and Business Model

The results for Product/Tech and Business Model were close to the average. This points to two possibilities.

Firstly, at the seed stage, product and business model may not be good reasons for rejecting a company. Roughly 50% of the investments we make at Frontline are pre-product and roughly 75% are pre-revenue, so at this stage of a company’s development it may simply not be that relevant to have fully worked out the business model and the technology. Perhaps, with a great team and an exciting market, founders will figure out product and business model? This is borne out when you look at the same groups with great teams: each has raised nearly 3x the average, which is getting quite close to how my portfolio has performed, suggesting that team is a substantially more important factor than either business model or technology.

Secondly, it is possible that technology and business model are relevant at the seed stage but that I am personally not good at picking the good from the bad. This is an area where I will exercise considerable caution from here on: I will seek more advice and support from my team, and I will continue to dig deeper during my due diligence process.

Price and Speed

Companies that I rejected because the price was too high, or because the deal was moving too fast for us, have gone on to raise more than my portfolio.

At Frontline, we have always tried our best to price investments fairly, reflecting where the team, product and market are. We believe that once new entrepreneurs understand how we add value through Platform, and hear what our entrepreneurs say about us, we will win almost all of our deals. While we have not lost an investment to a VC pricing a deal at a similar level to us, we have lost deals to other VCs who priced them materially higher. My analysis would argue for us to be less price-sensitive. However, it does not take into account the equity stake we get as an investor, which is a big part of our eventual outcome from a company’s exit. Highly priced deals by their very nature mean the VC gets a smaller stake (or has to pay a lot more for the same stake). I have slightly relaxed my price sensitivity since doing this analysis, but I still strongly believe that Frontline should be able to win the best deals on a fair valuation, our history of supporting our companies and what our entrepreneurs say about us, rather than by simply offering a higher price tag.
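The equity trade-off is plain arithmetic: for the same cheque, a higher pre-money valuation buys a smaller stake, so the company has to exit for proportionally more to return the same amount. A minimal sketch, assuming a simple priced round where stake = investment / (pre-money + investment); the round sizes and exit value are hypothetical, and later dilution, liquidation preferences and fees are ignored.

```python
def ownership(investment: float, pre_money: float) -> float:
    """Stake bought in a priced round: investment divided by post-money valuation."""
    post_money = pre_money + investment
    return investment / post_money


def exit_proceeds(investment: float, pre_money: float, exit_value: float) -> float:
    """Investor's share of an exit, ignoring later dilution, liquidation preferences and fees."""
    return ownership(investment, pre_money) * exit_value


# Hypothetical figures in millions: the same 1.0m cheque at two valuations, same 100m exit
cheque, exit_value = 1.0, 100.0
for pre_money in (4.0, 9.0):
    stake = ownership(cheque, pre_money)
    proceeds = exit_proceeds(cheque, pre_money, exit_value)
    print(f"pre-money {pre_money:.0f}m: {stake:.0%} stake, {proceeds:.0f}m at exit")
```

In this toy case the higher price halves the stake, so the company would need to exit for twice as much to return the same amount; capital raised since we passed does not capture that.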

Speed, on the other hand, is much more interesting. When I first did this analysis, more than nine months ago, it was clear that I had missed some great companies simply because I was not fast enough. The solution might seem simple: just make faster decisions. But we are managing other people’s capital, and making quick investment decisions without proper due diligence is reckless. Equally, not moving fast enough makes me miss great opportunities. So at Frontline, we changed our internal process. We believe that if we are one of an entrepreneur’s top choices, even the fastest-moving deals will be able to wait one week for us to reach an answer. Now, if a Partner thinks a great deal is about to be missed, they can get all the other Partners to cancel anything non-compulsory in their calendars over the next week so that due diligence, calls, meetings, investment committee etc. are done within 5–7 days. This way, Frontline can be confident that we are not making reckless decisions with our LPs’ capital and still move fast enough to be part of the fastest-moving deals. We have already used this process and won some great potential investments thanks to it.

Conclusions

While capital raised is not a perfect proxy for successful exits, this analysis does allow me to adjust and learn from my investment and rejection decisions sooner and on an ongoing basis, substantially shortening my learning cycles as a venture investor.

It has already reinforced my previously held views on weak founders and small markets, made me question how I evaluate business models and products, and changed our internal thinking on how fast we can get to a decision.

At Frontline, we believe in continuously improving our team and our practices as a VC. The analysis above is just one of the many ways we use data to do this. For example, we monitor all investments in our target areas and whether or not we saw them, and if not, why not. We monitor the sources of the higher- and lower-quality deals in our pipeline. We monitor the percentage of female-founded companies to see whether the initiatives we run to promote female entrepreneurs are making a difference. If people like this post, I’d be happy to elaborate on some of the other methods we use.

If you have any questions on the outcomes, the method for the analysis or how I collected the data, further ideas for me to analyze, or critiques of it, please feel free to comment below or reach out to me directly.

P.S. My opening image was inspired by a comment and image from Sarah Tavel at Benchmark Capital, in a blog post where she humorously advised graduates to seek jobs with short learning cycles, while lamenting how long those cycles are in VC.